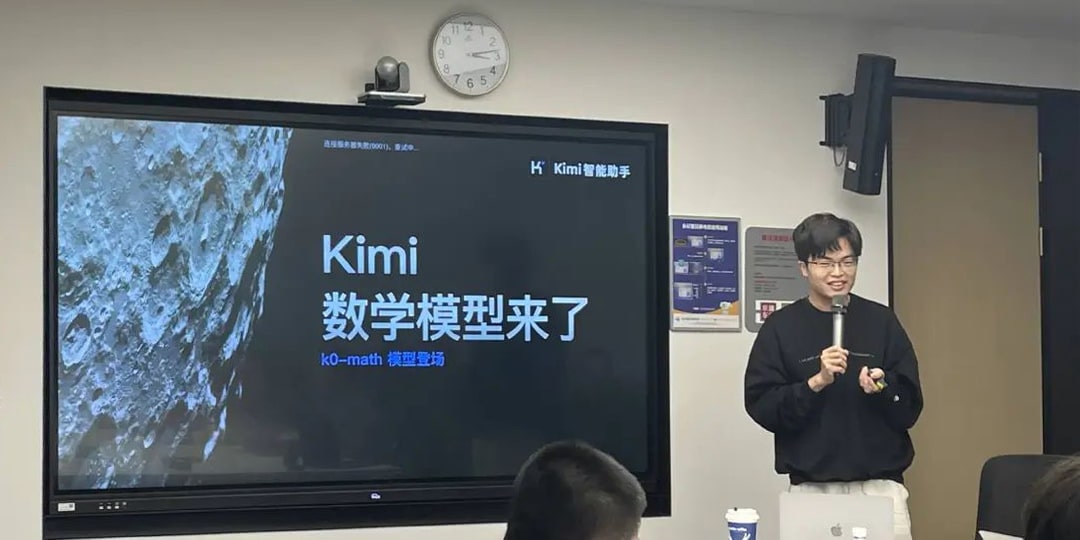

Kimi K2’s launch on July 16 drew global attention after Nature described it as “another DeepSeek moment.”

Why does that matter? Because Kimi K2 follows the DeepSeek playbook: high performance, low cost, and truly open source. Most importantly, it delivers.

Kimi K2 is purpose-built for agentic artificial intelligence.

Kimi algorithm engineer Justin Wong outlined the intent in a blog post: the team aims to shift human-AI interaction from chat-first to artifact-first. That means producing tangible outputs such as 3D models or spreadsheets rather than mere conversation threads.

In other words, the goal is to build a model that acts, not just chats.

That philosophy informs Kimi’s design. Where DeepSeek-R1 emphasized reasoning and only later introduced tool-use features, Kimi K2 did the reverse. While it also refined stylistic writing, it downplayed explicit reasoning, such as showing intermediate thought steps, to focus on agentic capabilities. “That’s a rare choice in the field,” one industry expert said.

Kimi K2 is optimized to call external tools quickly, based on chat context, maximizing task speed and output quality. It’s built to integrate with software like browsers, PowerPoint, Excel, and 3D rendering tools. Developers can also connect it to agent frameworks such as Owl, Cline, or RooCode for autonomous programming tasks.

Its real-world performance reflects this focus. With a simple prompt, Kimi K2 can generate rotatable 3D Earth models, simulate day-night cycles over mountain ranges, create stock trading dashboards, or render particle galaxies. It can also produce PowerPoint decks or analyze large datasets to output trends, visualizations, and statistical summaries with minimal input.

Another standout feature is its cost structure.

Despite performance close to that of Claude-class models, Kimi K2 is substantially cheaper. Its API is priced at RMB 4 (USD 0.56) per million input tokens and RMB 16 (USD 2.24) per million output tokens. In contrast, Claude 4 Sonnet’s programming-focused API costs USD 3 and USD 15 per million tokens, respectively, making Kimi K2 more than 75% less expensive: roughly 81% cheaper on input and 85% cheaper on output.
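To make the comparison concrete, the short Python sketch below works through the arithmetic using the per-million-token prices quoted above. The workload of 3 million input tokens and 1 million output tokens is a hypothetical example chosen for illustration, not a measured figure, and the function names are made up for this sketch.

```python
# Per-million-token list prices in USD, as quoted in the article.
PRICES = {
    "Kimi K2": {"input": 0.56, "output": 2.24},
    "Claude 4 Sonnet": {"input": 3.00, "output": 15.00},
}


def savings_pct(cheaper, pricier):
    """Percentage saved by paying `cheaper` instead of `pricier`."""
    return (1 - cheaper / pricier) * 100


def workload_cost(model, input_tokens, output_tokens):
    """USD cost of a workload at the model's per-million-token prices."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


kimi, claude = PRICES["Kimi K2"], PRICES["Claude 4 Sonnet"]
input_savings = savings_pct(kimi["input"], claude["input"])
output_savings = savings_pct(kimi["output"], claude["output"])

# Hypothetical workload: 3M input tokens and 1M output tokens.
kimi_cost = workload_cost("Kimi K2", 3_000_000, 1_000_000)
claude_cost = workload_cost("Claude 4 Sonnet", 3_000_000, 1_000_000)

print(f"Input savings:  {input_savings:.1f}%")
print(f"Output savings: {output_savings:.1f}%")
print(f"Workload cost:  USD {kimi_cost:.2f} vs USD {claude_cost:.2f}")
```

At these list prices, Kimi K2 comes out about 81% cheaper on input tokens and 85% cheaper on output tokens, and the hypothetical workload costs USD 3.92 versus USD 24.00.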

That matters because AI-powered programming is a vertical that is exploding. Cursor, one of the top players, saw annual recurring revenue (ARR) surge from USD 300 million in mid-April to over USD 500 million by June.

Developers have taken notice. After Kimi K2’s release, downloads spiked on Hugging Face, and it quickly climbed OpenRouter’s trending leaderboard. Users, frustrated by initial API bottlenecks, filled forums with complaints about slow or unstable response times.

Kimi K2 isn’t flawless. Its outputs can still be verbose, and its code trails top-tier models like Claude in quality. But it offers outsized value for cost. Independent tests show that a full day’s programming workload with Kimi K2 can cost just a few dollars, marking a win for accessibility.

Part of that value stems from technical innovation.

Kimi K2 adopted a new optimizer, Muon, in place of the commonly used AdamW. Muon reportedly cuts compute demands by nearly half, requiring just 52% of the compute AdamW needs on Llama-based models.

Optimizers determine how model weights are updated during training. More efficient optimizers improve training stability and speed, which in turn reduce compute costs. Choosing Muon was a risk. It hadn’t been formally published and had only been used on small-scale models. Kimi scaled it to the trillion-parameter range, overcoming hurdles and making Muon a defining feature of the launch.
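For readers who want a feel for what an optimizer like Muon does, here is a simplified Python sketch. As publicly described, Muon keeps a momentum buffer of gradients for each weight matrix and approximately orthogonalizes it with a Newton-Schulz iteration before applying the update. The sketch rests on several assumptions that do not come from this article: it uses the textbook cubic Newton-Schulz iteration rather than the tuned variant used in practice, it omits Nesterov momentum and other refinements, and the function names, learning rate, and tiny 2x2 matrices are purely illustrative. It is not Kimi's training code.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]


def orthogonalize(m, steps=8):
    """Approximately orthogonalize m with the cubic Newton-Schulz iteration.

    Assumes m is non-zero and full rank (an assumption of this sketch).
    """
    # Scale by the Frobenius norm so every singular value is at most 1,
    # which keeps the iteration inside its convergence region.
    norm = math.sqrt(sum(v * v for row in m for v in row))
    x = [[v / norm for v in row] for row in m]
    for _ in range(steps):
        # X <- 1.5 * X - 0.5 * (X X^T) X pushes each singular value toward 1.
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [[1.5 * p - 0.5 * q for p, q in zip(rp, rq)] for rp, rq in zip(x, xxtx)]
    return x


def muon_style_step(weights, grad, momentum, lr=0.02, beta=0.95):
    """One simplified Muon-style update; returns (new_weights, new_momentum)."""
    # Accumulate the gradient into the momentum buffer.
    momentum = [[beta * m + g for m, g in zip(rm, rg)] for rm, rg in zip(momentum, grad)]
    # Orthogonalize the buffer, then take a step in that direction.
    update = orthogonalize(momentum)
    weights = [[w - lr * u for w, u in zip(rw, ru)] for rw, ru in zip(weights, update)]
    return weights, momentum


weights = [[1.0, 0.0], [0.0, 1.0]]
momentum = [[0.0, 0.0], [0.0, 0.0]]
grad = [[3.0, 1.0], [1.0, 4.0]]  # made-up gradient for illustration
weights, momentum = muon_style_step(weights, grad, momentum)
```

Because the iteration pushes every singular value of the update toward 1, the step has a uniform scale across directions of the weight matrix regardless of the raw gradient's magnitude. That is one intuition offered for why this family of optimizers can train efficiently.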

Kimi K2’s launch highlights the cascading impact of DeepSeek’s debut.

When DeepSeek-R1 dropped in January, it shifted the global AI conversation. Until then, most Chinese AI startups had been chasing user acquisition with consumer-facing products.

Kimi followed suit, engaging in aggressive paid marketing campaigns to compete with ByteDance’s Doubao. But marketing is a game for large platforms, and Kimi struggled to keep pace. By late 2024, Doubao had over 100 million monthly active users.

Then DeepSeek changed the narrative.

Suddenly, the focus turned sharply to model capability. The consumer AI field narrowed to a few players: Tencent’s Yuanbao, Alibaba’s Quark, and Doubao. Commercialization took a back seat. With tech giants going all-in on model and app development, startups faced a stark choice: go open-source and solve hard problems, or step aside.

At a recent 36Kr event, ZhenFund partner Dai Yusen put it bluntly: “A year ago, everyone was chasing traffic and users. That was a game for big platforms. But now, the focus has shifted back to technical depth and cognitive strength, an arena where founder-led technical teams thrive.”

Since DeepSeek-R1, China’s six “AI dragons” have each charted their own course.

Kimi is gravitating toward Anthropic’s lane, emphasizing programming and agentic capabilities. MiniMax and StepFun are leaning into multimodal models. Zhipu AI is targeting enterprise and government markets. Baichuan AI is building for healthcare. 01.AI has exited foundational model R&D entirely to focus on deployment and application.

Kimi, once relatively obscure overseas, is now drawing global interest. Developers are taking note of CEO Yang Zhilin and the company’s punk aesthetic, with staff naming rooms after rock icons and posting candid updates online about late nights and unfinished infrastructure.

Even before Kimi K2 launched, traffic to Kimi’s web app rebounded 30% in June, an early signal that quality was beginning to cut through.

As one researcher on the team wrote: “In 2025, intelligence ceilings are still determined by models. For a company that claims to pursue artificial general intelligence (AGI), if you’re not pushing that boundary, I wouldn’t stay a day longer.”

The path to AGI may be narrow, but for the startups still on it, it may also be the widest road that remains, as long as they keep their focus.

KrASIA Connection features translated and adapted content that was originally published by 36Kr. This article was written by Deng Yongyi for 36Kr.